Social Media Moderation – The Ultimate Guide to Watch In 2026

Author: Wagisha Adishree

10 minute read

Believe it or not, social media moderation can make or break your business. Whether you are a PR strategist, a social media manager, or a digital marketer trying to manage your brand presence online, knowing how to moderate social media content is essential.

Social media platforms are not just channels where a brand discusses its products and services. Having an online presence involves more than posting content; it also means managing the conversations that happen around the brand.

This guide defines social media moderation and explains how brands can leverage it to improve their brand communication. So, without further ado, let us get into it.

What Is Social Media Moderation?

Social media moderation is the process of overseeing and managing content on social media platforms, websites, digital displays, and more. This involves actively monitoring debates and discussions to identify and remove harmful or offensive content that violates platform guidelines, local laws, or social norms. Moderation plays a crucial role in enforcing guidelines: moderators examine user-generated content (UGC) and take appropriate action, such as flagging or deleting unwanted content. Moderators are also responsible for handling crises, responding to customer issues, and maintaining the brand’s image.

Types of Social Media Moderation

There are five significant types of Social Media Moderation. Take a look.

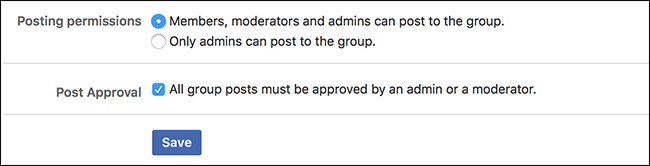

Pre-Moderation

In this type of moderation, all content is reviewed before it is published on the website. Pre-moderation gives the moderator a good sense of control over the content. After submitting content, the user has to wait until the moderator has reviewed and approved it.

Post Moderation

Post moderation is where content is moderated after it has been posted. Users can post whatever they want, and the content is then queued for moderation. Human moderators remove the content if it is flagged or found to be inappropriate.
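The difference between pre-moderation and post moderation comes down to when content becomes visible. The following is a minimal sketch of that idea, not any platform's actual implementation; the names `Mode`, `Post`, and `ModerationQueue` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    PRE = "pre"    # review first, publish only on approval
    POST = "post"  # publish first, review afterwards


@dataclass
class Post:
    author: str
    text: str
    visible: bool = False


@dataclass
class ModerationQueue:
    mode: Mode
    pending: list = field(default_factory=list)

    def submit(self, post: Post) -> None:
        # Post moderation shows the content right away; pre-moderation holds it back.
        post.visible = self.mode is Mode.POST
        self.pending.append(post)

    def review(self, post: Post, approved: bool) -> None:
        # In both modes, the moderator's decision sets the final visibility.
        post.visible = approved
        self.pending.remove(post)
```

In both modes every post passes through the same review step. Only the visibility while the post waits in the queue differs.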

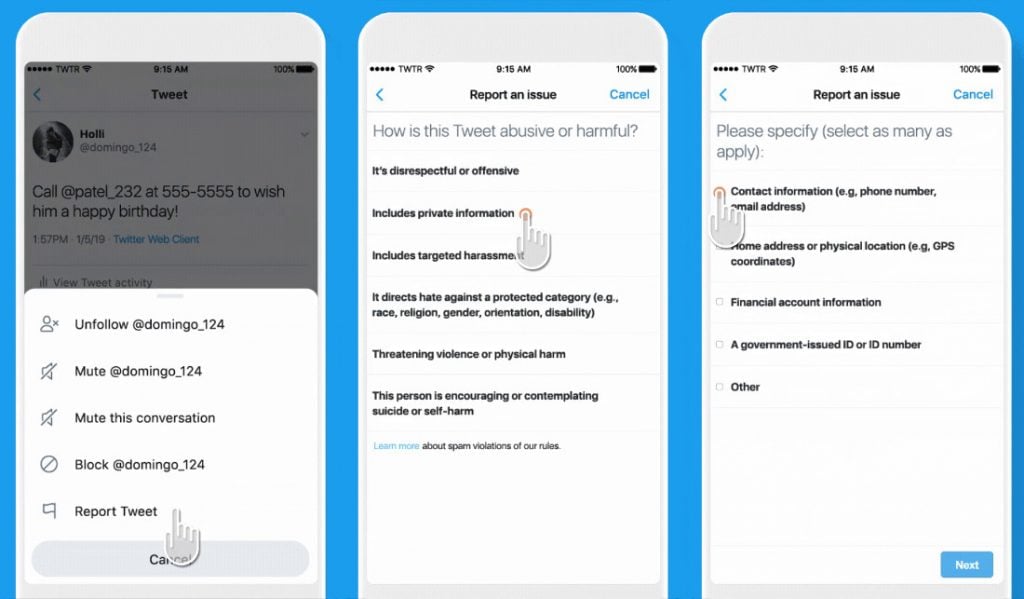

Reactive Moderation

Reactive moderation is a moderation approach in which the moderator responds to user reports or complaints about inappropriate or harmful content on the platform. Users can flag content they believe is inappropriate or violates the platform’s guidelines. This method typically relies on human moderators or forum administrators, who monitor chat channels and act on user reports.

Hybrid Moderation

Hybrid moderation is a mixed type of moderation that combines the approaches mentioned above. When a user posts content, it first undergoes automated moderation. After this, the moderation process depends on the history of the user’s past posts. If a user consistently posts good content without any issues, the system assigns a higher probability that new content from that user is of good quality.

In practice, hybrid moderation combines AI-powered tools with human moderators to manage content. While AI tools quickly scan for potential violations, the human moderators make the final call.
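One way to picture this routing is a small function that combines an automated risk score with the author's track record. This is only a sketch under stated assumptions: the `route` function, the `risk_score` input, and both thresholds are hypothetical and are not taken from any specific moderation tool.

```python
def route(risk_score: float, clean_posts: int, total_posts: int,
          risk_threshold: float = 0.5, trust_threshold: float = 0.9) -> str:
    """Return 'publish' or 'human_review' for a new post.

    risk_score runs from 0.0 (harmless) to 1.0 (clear violation) and is
    assumed to come from some automated scan.
    """
    # New authors have no history, so they are treated as untrusted.
    trust = clean_posts / total_posts if total_posts else 0.0
    if risk_score < risk_threshold and trust >= trust_threshold:
        return "publish"
    # Risky content, or content from an author without a clean record,
    # goes to a human moderator for the final call.
    return "human_review"
```

For example, `route(0.1, 95, 100)` returns `"publish"`, while the same score from a brand-new author, `route(0.1, 0, 0)`, returns `"human_review"`.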

Distributed Moderation

Distributed moderation is a content moderation method that involves users in deciding whether content is appropriate. It uses a rating system in which community members vote on whether submissions align with the rules, which helps keep comments and forum posts within the moderation rules.
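A voting rule like this can be sketched in a few lines. The function name `community_verdict`, the minimum vote count, and the approval ratio below are all assumptions chosen for illustration; real communities tune these values to their own size and tolerance.

```python
def community_verdict(votes: list[bool], min_votes: int = 5,
                      keep_ratio: float = 0.6) -> str:
    """Decide what happens to a submission based on community votes.

    Each vote is True ("follows the rules") or False ("breaks the rules").
    """
    if len(votes) < min_votes:
        # Too few votes to trust the result yet.
        return "undecided"
    approval = sum(votes) / len(votes)
    return "keep" if approval >= keep_ratio else "hide"
```

Requiring a minimum number of votes keeps a single early voter from deciding the outcome alone.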

Why is Social Media Moderation Important for a Brand’s Success?

Here is a quick breakdown of why social media moderation is essential for any brand.

Brand reputation protection

Moderation helps brands swiftly identify potential crises caused by negative comments and hate speech. It also helps remove inappropriate and offensive content and maintain a professional brand image. Active moderation allows a brand to reshape the conversation around it by responding to feedback and proactively addressing issues. Moderation can also involve showcasing the best content to enhance the brand’s reputation: brands can pick and highlight the best content from their social media platforms to make a strong first impression on potential customers.

Trust building in online spaces

Moderation creates a welcoming environment where the users feel comfortable communicating and engaging with the brand. Besides, timely responses to comments and moderation show that the brand values its audience and is committed to providing excellent services.

Enhanced User Experience And Customer Satisfaction

Social media moderation directly impacts the user experience and customer satisfaction. A social media manager ensures the moderation workflow keeps the community safe and inviting, making it easier for users to engage comfortably with the brand and with one another. When brands actively manage comments and other forms of content, customers feel valued and heard.

Best Practices for Social Media Moderation

Regular Content Audits

Regular content audits are essential because they help moderation teams identify patterns, trends, and potential issues. Teams that analyze content metrics such as likes, shares, comments, and reporting rates gain valuable insights into user behavior and content dynamics.

Additionally, collecting and evaluating user feedback allows the moderators to understand community concerns and areas of discontent.

Implementing a routine review process can help refine moderation strategies and policies. This might involve setting up reviews where the moderation team analyzes data and identifies emerging issues.

Scalability In Moderation Efforts

With an increase in user engagement, moderation efforts must also scale proportionally. As platforms grow, adopting scalable solutions that can handle rising content without compromising quality is crucial.

Train Moderators

Once your moderators are hired, training is essential. Proper training ensures that moderators are familiar with the guidelines and know how to handle different situations. They need to be equipped with the skills to deal with various types of content, from simple misunderstandings to serious violations.

Training should include conflict resolution techniques and a strong foundation in the platform’s policies. This way, moderators can approach each situation with fairness.

Be Proactive

Proactive moderation is key to creating a safe online environment. Instead of waiting for users to report violations, moderators should take the initiative to identify problematic content before it escalates. This means keeping an eye on trends and recognizing patterns of behavior that might indicate a potential issue. For example, moderators should step in if a particular user frequently posts aggressive comments or if specific posts are receiving an unusually high number of reports. Proactive measures help mitigate conflicts early, reducing the chances of user harm and preventing a toxic atmosphere from developing.
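The two warning signs in the example above, heavily reported posts and authors who are reported repeatedly, can be surfaced from a plain log of user reports. The sketch below assumes a hypothetical report log of `(post_id, author)` pairs; the function name `needs_attention` and both limits are illustrative, not a standard.

```python
from collections import Counter


def needs_attention(reports, post_report_limit=3, author_post_limit=2):
    """Find posts and authors a moderator should look at first.

    reports: iterable of (post_id, author) pairs, one pair per user report.
    Returns (hot_posts, repeat_authors) as two sets.
    """
    reports = list(reports)
    # Posts that gathered an unusually high number of reports.
    per_post = Counter(post_id for post_id, _ in reports)
    hot_posts = {p for p, n in per_post.items() if n >= post_report_limit}
    # Count distinct reported posts per author, not raw report volume,
    # so one heavily reported post does not mark its author as a repeat case.
    per_author = Counter(author for _, author in set(reports))
    repeat_authors = {a for a, n in per_author.items() if n >= author_post_limit}
    return hot_posts, repeat_authors
```

Running a check like this on a schedule gives moderators a short list to review before problems escalate.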

Collaboration With Law Enforcement

Platforms should partner with law enforcement agencies to address illegal activities—such as cyberbullying, threats, or criminal behavior. These collaborations are essential for enhancing response capabilities and ensuring compliance with legal requirements.

When platforms establish clear protocols for reporting illegal content to authorities, they can act swiftly on users’ complaints and assist in potential investigations.

Encourage User Reporting

Encourage users to report inappropriate or offensive content by providing easy-to-use reporting mechanisms and tools. Prioritize the review process for these reports to ensure that violations are addressed swiftly and effectively. An environment where users feel empowered to speak up helps create a safer and more respectful community. Timely responses to user reports also build trust and show a commitment to maintaining a positive atmosphere for all participants.

Promote Transparency

To create trust and accountability with our users, it is essential to actively promote transparency in our moderation practices. This means openly communicating the decisions made in moderation, explaining the reasons behind these actions, and ensuring users understand the guidelines in place. Additionally, we should provide a clear channel for users to appeal decisions or offer feedback.

This helps the community and enhances the user experience, showing that we value users’ input and are committed to fair and just moderation.

5 Best Social Media Moderation Tools in 2026

1. Social Walls – Best For Social Media Wall Content Moderation

Social Walls is a platform that lets users collect and display user-generated content from Instagram, Twitter, YouTube, TikTok, and other platforms on their events, digital displays, and websites. This platform excels in content moderation, allowing users to curate and showcase the best user-generated content.

Here is how Social Walls helps with content moderation:

- Its AI-powered filtering system automatically identifies and removes duplicate content.

- Users can generate AI-powered tags based on visuals, allowing them to spot and manage aggregated content featuring the same visuals without manual effort.

- Users can rely on features like recommendation scores and sentiment analysis to highlight the most engaging content from their event.

- Block posts and comments based on keywords to maintain a consistent brand image.
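To give a feel for what duplicate filtering involves, here is a deliberately simple sketch. It is not how Social Walls works: the product describes an AI-powered filter, whereas this example only catches copies that differ in letter case or spacing. The function name `dedupe` is hypothetical.

```python
import hashlib


def dedupe(posts):
    """Return posts in order, skipping text already seen in normalized form."""
    seen = set()
    unique = []
    for text in posts:
        # Collapse case and whitespace so trivially different copies match.
        normalized = " ".join(text.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(text)
    return unique
```

Near-duplicates such as reworded captions or re-cropped images need fuzzier matching than this, which is where AI-based filters come in.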

2. Taggbox – Perfect Moderator For Social Media Widget

Taggbox is a social media aggregator tool that lets users collect, curate, and embed social media content on their websites. This platform allows users to create maximum impact and get more user-generated content by curating helpful content through the moderation panel. Taggbox gives users total control over the content posted on the widget. Users can quickly review the collected and curated content to ensure only relevant content reaches the top.

Here are the top moderation features Taggbox users can opt for:

- The Taggbox auto-moderation system uses advanced algorithm techniques to analyze content and make decisions based on predefined rules. The rules can be as simple as blocking content containing specific keywords or identifying hate speech.

- It also provides profanity and spam filters, which let users ensure they are not collecting or curating profane, abusive, or sexual content on social media widgets.
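A rule that blocks content containing specific keywords can be sketched with a regular expression. This is a generic illustration, not Taggbox's rule engine; the helper names `build_blocklist` and `is_blocked` and the sample keywords are assumptions.

```python
import re


def build_blocklist(keywords):
    """Compile a case-insensitive pattern that matches any whole keyword."""
    if not keywords:
        # An empty blocklist should never match anything.
        return re.compile(r"(?!)")
    # Word boundaries avoid blocking harmless words that merely contain a keyword.
    alternatives = "|".join(re.escape(k) for k in keywords)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


def is_blocked(text, blocklist):
    """Return True if the text contains at least one blocked keyword."""
    return blocklist.search(text) is not None
```

With `build_blocklist(["scam", "free money"])`, the text "This is a SCAM!" is blocked, while "Scampi recipe" is not, because the keyword must appear as a whole word. Keyword rules like this are easy to evade with creative spelling, which is why they are usually paired with other filters.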

3. Hootsuite

Hootsuite offers large enterprises a central dashboard for scheduling and moderating social media content. The platform supports real-time monitoring and automatic moderation.

Here are some ways users can use Hootsuite’s social media moderation.

- Users can add all their accounts to one Smart Moderation account, which lets them moderate all the content from one dashboard. This feature lets the users block unwanted users and spammers.

- Hootsuite claims an accuracy rate of 95% and is used as a social media monitoring app by major brands.

- Hootsuite has a flexible post composer and scheduler with a bulk option, simplifying the most challenging part of the job.

4. Sprinklr

Sprinklr is a customer experience management platform that helps businesses improve customer experiences across all channels.

Here are some of Sprinklr’s moderation-based features.

- To moderate profanity, users can set rules to track, approve, or stop publishing content containing prohibited words.

- Users can also moderate comments on paid and organic pages from a centralized dashboard and automate workflows to analyze the conversations.

5. Brandwatch (formerly Falcon.io)

Brandwatch, formerly known as Falcon.io, is a social media management and customer intelligence platform for brands.

Here are the top moderation features offered by Brandwatch:

- The platform lets users run sentiment analysis to determine the nature of a conversation.

- It also offers AI-powered alerts that detect spikes or drops in brand mentions.

- The platform also helps users track conversations on Twitter and forums.
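Alerts for spikes or drops in brand mentions can be approximated with basic statistics. The sketch below is not Brandwatch's method; it is a simple z-score check, and the function name `mention_alert` and the default threshold are assumptions.

```python
from statistics import mean, pstdev


def mention_alert(history, today, z_threshold=2.0):
    """Compare today's mention count with recent daily counts.

    Returns "spike", "drop", or None when today looks normal.
    """
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any change at all stands out.
        if today == baseline:
            return None
        return "spike" if today > baseline else "drop"
    # How many standard deviations today sits from the recent average.
    z = (today - baseline) / spread
    if z >= z_threshold:
        return "spike"
    if z <= -z_threshold:
        return "drop"
    return None
```

A spike is not necessarily bad news, so an alert like this works best as a prompt for a moderator to check what the conversation is about.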

Conclusion

Social media moderation is not just a reactive measure but a proactive strategy that can significantly enhance your brand’s presence and reputation in the digital landscape. Implementing effective moderation practices safeguards your brand and creates a community where customers feel heard and valued. As we navigate through 2026, maintaining a positive online environment is essential, since brand reputation will strongly influence sales in the coming years.

We have covered the essentials of social media moderation for your brand. Put these practices to use and see your business grow.